Markerless AR functionality allows developers to create digital applications that overlay interactive augmentations on physical surfaces, without the need for a marker.

We can all agree that computer vision is a key part of the future of augmented reality, mobile or not. That's why we've been working so hard on our Instant Tracking over the last few years. If you are not yet familiar with this feature: Instant Tracking brings digital recreations of real-world things into the user's surroundings anytime, anywhere, without the user having to scan an image first.

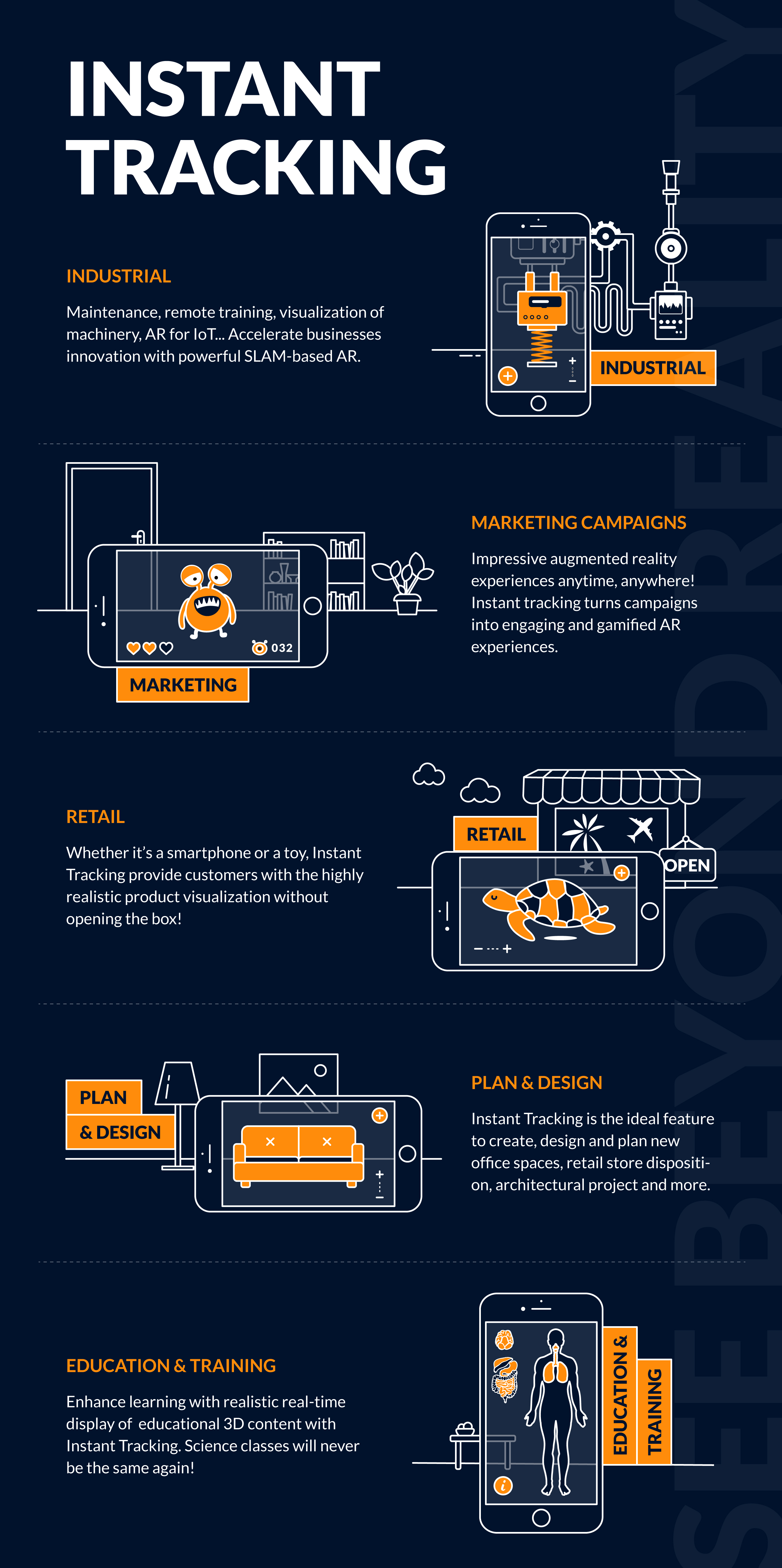

Instant Tracking is also the first feature to use Wikitude's Simultaneous Localization and Mapping (SLAM) technology. SLAM determines the user's precise position within an unknown environment while simultaneously mapping that environment during the Instant Tracking AR experience. This allows developers to easily map environments and display augmented reality content without the need for target images or objects (markers). Wikitude's SLAM markerless augmented reality tracking is one of the most versatile cross-platform 3D-tracking systems available for mobile.

Our SDK's own SLAM Instant Tracking technology can also be dynamically connected to ARKit and ARCore (Wikitude SMART). SMART is a seamless API that integrates ARKit, ARCore and Wikitude's SLAM engine in a single cross-platform AR SDK. It lets developers build their projects in JavaScript, Unity, Xamarin, PhoneGap or Flutter without having to deal with ARKit- or ARCore-specific code. SMART dynamically identifies the end user's device and decides whether ARKit, ARCore or Wikitude SLAM should be used in each particular case.

Here are some interesting use cases for Wikitude's markerless augmented reality feature:

Where you need to grab someone’s attention, immediately

Getting someone to look at your product is the first step of a good marketing strategy. For both marketing and retail implementations, augmented reality offers immense opportunity to do that. It’s new, easy to understand, and impossible to ignore.

Do you know where most people alive today first saw the concept of augmented reality (even if they didn't know it at the time)? It was in this scene with Michael J. Fox in Back to the Future Part II.

Maybe it's not as slick and refined as today's visual effects, but back in 1989 it was certainly surprising – and attention-grabbing. That's part of how AR still works today, especially over the next couple of years as widespread adoption continues to grow. The most important thing to remember? If you truly surprise someone, they'll be sure to tell everyone they know all about it.

The potential here for retail, both online and in physical stores, is clear – customers can interact directly with the product and come as close as they can to touching and feeling it without actually holding it in their hands.

Even more opportunity exists in gaming and entertainment – check out how Socios.com gives sports fans the opportunity to collect crypto tokens, earn reward points and unlock experiences with their favorite sports clubs.

When you need to add one small piece of information

AR is at its best when it does just what it says: augment. AR can turn your phone into a diagnostic tool of unparalleled power – perceptive and reactive, hooked into the infinite database of the world wide web.

Adding a few, small, easy to understand bits of information to a real scene can simply help our mind process information so much more quickly – and clearly. Here’s a great example where an automobile roadside assistance service can help a customer diagnose a problem – without actually being anywhere on the roadside.

The opportunities here are endless – factory floor managers, warehouse workers, assembly-line technicians – anyone who needs real-time information, in a real-world setting. It’s a huge technological leap forward for the enterprise – just like when touchscreen mobile devices with third-party apps first appeared.

Where you need to show a physical object in relation to other objects

There’s a reason this idea keeps coming up – it solves a real-world problem, instantly, today.

Architecture, interior design – any creative profession that works in real world spaces can take advantage of augmented reality.

From visualizing artworks to virtually fitting furniture in your living room, the benefit here is clear – we can understand how a real-world space will look and function so much better when we can actually see the objects we're thinking of putting there, while we are there.

This last bit is why mobile AR is so important – if we want to make AR a practical technology, we have to be able to use it where we live, work, build and play, and we don't want to drag a computer (or at least, a computer larger than our smartphone) everywhere we go to do it.

Here's an example of placing designer clothing in a real-world setting, created by the ARe app and powered by Wikitude:

Markerless AR opens up endless opportunities to showcase products of any size, from industrial machines to cars and jewellery, and enables a new kind of shopping experience that can take place directly on the customer's mobile device at any time. Features such as 360-degree product viewers, custom feature annotations and 24/7 access allow customers to configure and compare products, communicate with merchants and shop in the comfort of their homes.

So be creative in your AR applications – and do something surprising. Developers all over the world are already using Wikitude technology to build AR apps that grab attention and win customers – and it's making their lives easier.

Want to dig deeper? We've collected a few of our favorite use cases in the infographic, and a list of apps already using the technology in this YouTube playlist. Have a look and see what inspires you to build something of your own!

Looking to get started with markerless AR?

Interested in creating an AR project of your own?

Talk to one of our specialists and learn how to get started.